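# Load necessary libraries

library(sf)             # spatial data handling (st_as_sf, st_within)

library(rnaturalearth)  # country boundaries (ne_countries)

library(leaflet)        # interactive maps

library(caret)          # createDataPartition, confusionMatrix

library(randomForest)   # Random Forest model

library(ggplot2)        # feature importance plot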

# Load Nigeria's boundary as an sf object

nigeria <- ne_countries(scale = "medium", country = "Nigeria", returnclass = "sf")

# Define Nigeria's latitude and longitude bounds

nigeria_lat_range <- c(4.2, 13.9)

nigeria_lon_range <- c(2.7, 14.6)

# Set seed for reproducibility

set.seed(123)

# Generate sample points across Nigeria

n_locations <- 1000

sample_data <- data.frame(

location_id = 1:n_locations,

latitude = runif(n_locations, nigeria_lat_range[1], nigeria_lat_range[2]),

longitude = runif(n_locations, nigeria_lon_range[1], nigeria_lon_range[2]),

population_density = rnorm(n_locations, mean = 5000, sd = 2000),

median_income = rnorm(n_locations, mean = 65000, sd = 15000),

competition_count = rpois(n_locations, lambda = 3),

traffic_score = runif(n_locations, 1, 100),

parking_available = sample(c(TRUE, FALSE), n_locations, replace = TRUE),

success = sample(c(1, 0), n_locations, prob = c(0.6, 0.4), replace = TRUE)

)

# Convert to sf object

sample_sf <- st_as_sf(sample_data, coords = c("longitude", "latitude"), crs = 4326)

# Ensure points are within Nigeria (land area)

sample_sf <- sample_sf[st_within(sample_sf, nigeria, sparse = FALSE), ]

############ Viz Data #########################

# Define a diverging color palette (RdYlBu for better contrast)

pal <- colorNumeric(palette = "RdYlBu", domain = sample_sf$median_income, reverse = FALSE)

# Create the Leaflet map with improved color variation

leaflet(sample_sf) %>%

addProviderTiles(providers$CartoDB.Positron) %>%

addCircleMarkers(

radius = 4,

color = ~pal(median_income), # Apply diverging color scale

fillOpacity = 0.7,

popup = ~paste("ID:", location_id,

"<br>Population Density:", round(population_density, 2),

"<br>Median Income:", round(median_income, 2))

) %>%

addLegend(

pal = pal,

values = sample_sf$median_income,

title = "Median Income",

opacity = 1

)

Introduction: The Power of Data-Driven Decision Making

In today’s competitive business landscape, informed decisions about business locations can mean the difference between success and failure. Traditional methods rely heavily on intuition and basic market research; modern data science techniques offer a more robust approach. This demonstration shows how machine learning could be applied to business site selection using synthetic data. Although the data is simulated, the techniques and approach demonstrate the potential of data-driven decision making in location planning.

The Business Challenge

Imagine you’re tasked with expanding your business to new locations. You need to answer a crucial question:

“Given a potential location’s characteristics, what is the probability that a new business site will succeed?”

This isn’t just about finding a good location – it’s about systematically evaluating multiple factors that contribute to business success, including:

- Population density

- Median income levels

- Competition in the area

- Traffic patterns

- Parking availability

Building a Predictive Model

To showcase the potential of predictive modeling in site selection, we’ve created a synthetic dataset that mirrors the complexities of real-world location decisions. Our demonstration encompasses 1000 simulated locations across Nigeria, each characterized by carefully generated attributes that reflect typical market indicators. The synthetic nature of this data allows us to explore the full potential of our analytical approach while acknowledging that real-world implementation would require actual historical data.

Setting Up Our Environment

First, we’ll load the necessary libraries and prepare our data:

Data Preparation

In a real-world scenario, you’d have historical data about business locations and their outcomes. For this demonstration, we instead use the synthetic dataset generated in the code shown earlier, which mimics the kinds of attributes such data would contain.
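The synthetic dataset in this post draws the success label at random, independently of every feature, so no model can find real structure in it. A minimal sketch of one alternative follows: the label is drawn from a logistic function of the features, so that high-traffic, high-income sites succeed more often. The `sim` data frame and every coefficient are hypothetical values chosen for illustration, not estimates from any real market.

```r
# Sketch: synthetic sites whose success depends on their attributes.
# All coefficients below are assumptions made for illustration only.
set.seed(123)
n <- 1000

sim <- data.frame(
  population_density = rnorm(n, mean = 5000, sd = 2000),
  median_income      = rnorm(n, mean = 65000, sd = 15000),
  competition_count  = rpois(n, lambda = 3),
  traffic_score      = runif(n, 1, 100),
  parking_available  = sample(c(TRUE, FALSE), n, replace = TRUE)
)

# Log-odds of success as a linear function of the features
log_odds <- 0.4 +
  0.5  * as.numeric(scale(sim$population_density)) +
  0.5  * as.numeric(scale(sim$median_income)) -
  0.3  * (sim$competition_count - 3) +
  0.03 * (sim$traffic_score - 50) +
  0.3  * sim$parking_available

# Convert log-odds to probabilities and draw a 0/1 outcome per site
sim$success <- rbinom(n, size = 1, prob = plogis(log_odds))
```

With labels generated this way, a model trained on `sim` has something to learn, which makes accuracy and importance scores easier to interpret.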

Data Preprocessing

Before training our model, we need to prepare our data properly:

# Convert boolean to factor

sample_data$parking_available <- as.factor(sample_data$parking_available)

sample_data$success <- as.factor(sample_data$success)

# Scale numeric variables

numeric_vars <- c("population_density", "median_income", "competition_count", "traffic_score")

preprocessed_data <- sample_data

preprocessed_data[numeric_vars] <- scale(sample_data[numeric_vars])

Model Training and Evaluation

We’ll use a Random Forest model, which suits this kind of task because it handles mixed numeric and categorical predictors and can capture non-linear effects with little tuning:

# Split data into training and testing sets

set.seed(456)

train_index <- createDataPartition(preprocessed_data$success, p = 0.8, list = FALSE)

train_data <- preprocessed_data[train_index, ]

test_data <- preprocessed_data[-train_index, ]

# Train Random Forest model

rf_model <- randomForest(

success ~ population_density + median_income + competition_count +

traffic_score + parking_available,

data = train_data,

ntree = 500,

importance = TRUE

)

Let’s evaluate how well our model performs:

# Function to evaluate model performance

evaluate_model <- function(model, test_data) {

predictions <- predict(model, test_data)

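# Note: confusionMatrix() uses the first factor level ("0") as the positive class

# unless `positive` is set, so precision and recall below describe class "0"

confusion_matrix <- confusionMatrix(predictions, test_data$success)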

# Calculate various metrics

accuracy <- confusion_matrix$overall["Accuracy"]

precision <- confusion_matrix$byClass["Pos Pred Value"]

recall <- confusion_matrix$byClass["Sensitivity"]

f1_score <- confusion_matrix$byClass["F1"]

return(list(

accuracy = accuracy,

precision = precision,

recall = recall,

f1_score = f1_score,

confusion_matrix = confusion_matrix

))

}

model_evaluation <- evaluate_model(rf_model, test_data)

# Print model performance metrics

cat("Model Performance Metrics:\n",

sprintf("Accuracy: %.3f\n", model_evaluation$accuracy),

sprintf("Precision: %.3f\n", model_evaluation$precision),

sprintf("Recall: %.3f\n", model_evaluation$recall),

sprintf("F1 Score: %.3f\n", model_evaluation$f1_score),

sep="")

Model Performance Metrics:

Accuracy: 0.558

Precision: 0.417

Recall: 0.250

F1 Score: 0.312

The Random Forest model in this demonstration shows how machine learning can process multiple location attributes simultaneously, weighing factors such as population density, median income, and traffic patterns. Its accuracy of 55.8% is modest, which is expected here: the synthetic success labels were sampled independently of the features, so there is no real signal to learn. The result illustrates the mechanics of the prediction process and leaves clear room for refinement with real-world data.
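To make the reported metrics concrete, the sketch below recomputes them from confusion-matrix counts. The article does not print the confusion matrix, so the four counts are an assumption: they were chosen because they reproduce the reported accuracy, precision, recall, and F1 when class "0" is treated as the positive class. The sketch also computes the accuracy of always predicting the more common class, a useful baseline for any classifier.

```r
# Assumed confusion counts (not printed in the article); "0" is the positive class
tp <- 20  # predicted 0, actual 0
fp <- 28  # predicted 0, actual 1
fn <- 60  # predicted 1, actual 0
tn <- 91  # predicted 1, actual 1
total <- tp + fp + fn + tn

accuracy  <- (tp + tn) / total
precision <- tp / (tp + fp)
recall    <- tp / (tp + fn)
f1        <- 2 * precision * recall / (precision + recall)

# Accuracy obtained by always predicting the more common actual class
majority_baseline <- max(tp + fn, fp + tn) / total

print(round(c(accuracy = accuracy, precision = precision, recall = recall,
              f1 = f1, majority_baseline = majority_baseline), 3))
```

Under these assumed counts the majority-class baseline is about 0.598, slightly above the model's 0.558, which is what one would expect when the labels carry no signal.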

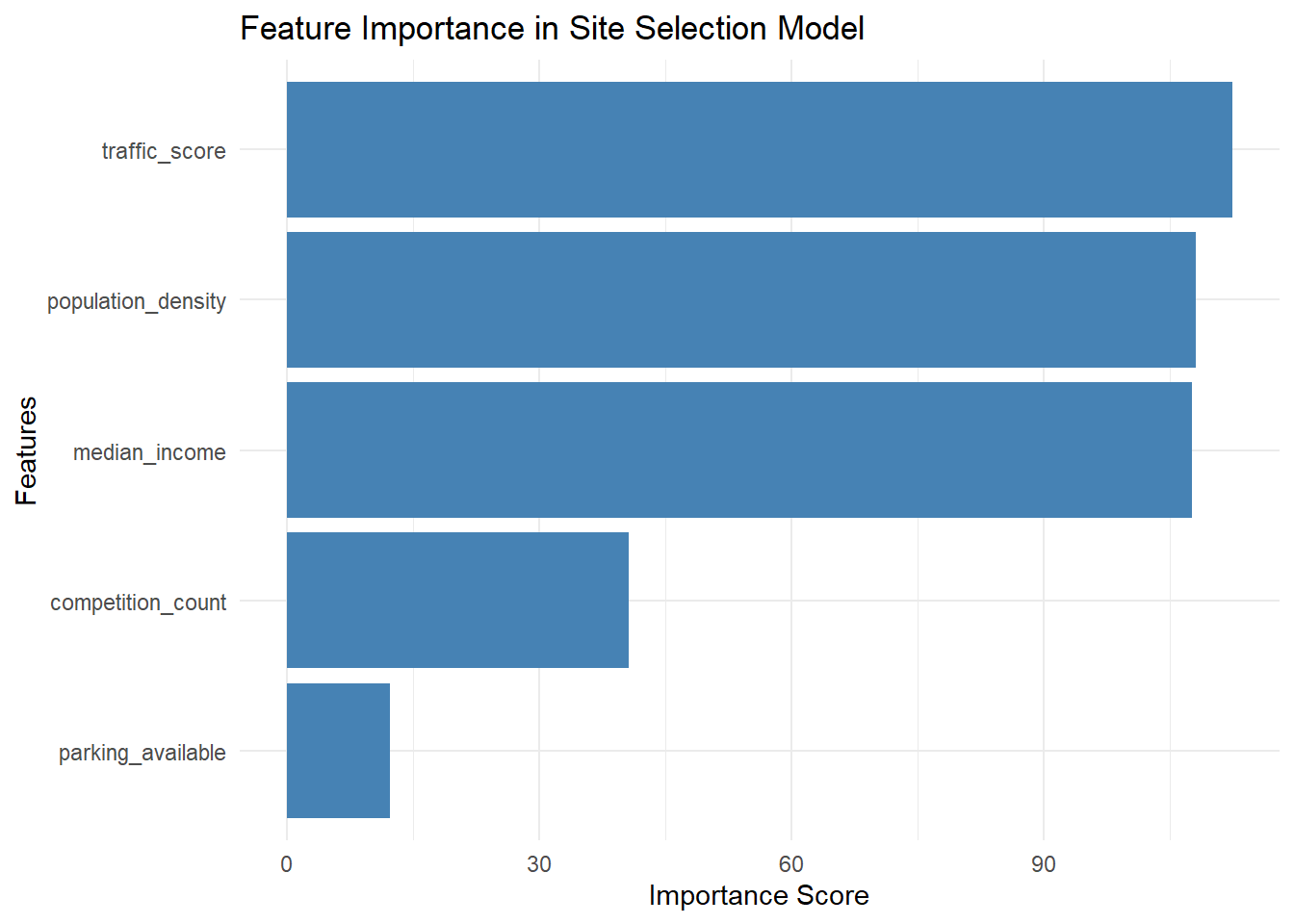

Traffic score received the highest importance score (112.36), followed closely by population density (108.07) and median income (107.58). Competition count (40.67) and parking availability (12.24) scored much lower. On real data, a ranking like this would suggest that businesses do best in high-traffic, densely populated, affluent areas and that parking matters less than expected. Here it should be read with caution: Gini-based importance tends to favor continuous variables with many possible split points over counts and binary flags, and the synthetic success labels were generated independently of every feature. The exercise still shows how such a ranking is produced and why several factors should be weighed rather than relying on traditional assumptions.

Understanding Feature Importance

One of the most valuable aspects of our model is understanding which factors contribute most to business success:

importance_scores <- importance(rf_model)

importance_df <- data.frame(

Feature = rownames(importance_scores),

Importance = importance_scores[, "MeanDecreaseGini"]

)

importance_df <- importance_df[order(-importance_df$Importance), ]

ggplot(importance_df, aes(x = reorder(Feature, Importance), y = Importance)) +

geom_bar(stat = "identity", fill = "steelblue") +

coord_flip() +

theme_minimal() +

labs(

title = "Feature Importance in Site Selection Model",

x = "Features",

y = "Importance Score"

)

print(head(importance_df))

Feature Importance

traffic_score traffic_score 112.35753

population_density population_density 108.06737

median_income median_income 107.58823

competition_count competition_count 40.66624

parking_available parking_available 12.24228

Our synthetic model reveals interesting patterns in feature importance, with traffic scores, population density, and median income emerging as the most influential factors. While these results stem from generated data, they showcase how such a model could identify key success drivers in actual market conditions. The visualization of success probabilities across Nigeria’s geography demonstrates the potential for spatial insight in location strategy.
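One way to check whether an importance ranking reflects real signal is permutation importance: shuffle one feature at a time and measure how much predictive accuracy drops. The sketch below is a model-agnostic illustration in base R that uses a logistic regression in place of the Random Forest to stay dependency-free; the `signal` and `noise` features and all parameter values are hypothetical. For the forest itself, `importance(rf_model, type = 1)` reports randomForest's own permutation-based measure (mean decrease in accuracy), which is available because the model was trained with `importance = TRUE`.

```r
# Sketch: permutation importance on simulated data (all values are assumptions)
set.seed(1)
n <- 500
signal <- rnorm(n)                          # feature that drives the outcome
noise  <- rnorm(n)                          # feature unrelated to the outcome
y <- rbinom(n, 1, plogis(2 * signal))
df <- data.frame(y = y, signal = signal, noise = noise)

# Stand-in model: logistic regression instead of a Random Forest
fit <- glm(y ~ signal + noise, data = df, family = binomial)

# Share of correct 0/1 predictions at a 0.5 probability cut-off
accuracy <- function(model, data) {
  pred <- as.integer(predict(model, data, type = "response") > 0.5)
  mean(pred == data$y)
}

baseline <- accuracy(fit, df)

# Average accuracy drop over 20 shuffles of each feature
perm_importance <- sapply(c("signal", "noise"), function(v) {
  drops <- replicate(20, {
    shuffled <- df
    shuffled[[v]] <- sample(shuffled[[v]])
    baseline - accuracy(fit, shuffled)
  })
  mean(drops)
})

print(round(perm_importance, 3))
```

A feature whose shuffling barely changes accuracy carries little predictive information, however high its impurity-based score. In practice the drop should be measured on held-out data rather than on the training set used here for brevity.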

Making Predictions for New Locations

Now comes the exciting part: applying the model to new business locations. We generate 10 new sites across Nigeria and estimate each one’s probability of success with the trained Random Forest model.

set.seed(400)

# Generate sample points across Nigeria

n_locations <- 10

# Define Nigeria's latitude and longitude bounds

nigeria_lat_range <- c(4.2, 13.9) # Nigeria's latitudes (south to north)

nigeria_lon_range <- c(2.7, 14.6) # Nigeria's longitudes (west to east)

# Generate skewed data

skewed_population_density <- round(3000 + rgamma(n_locations, shape = 2, scale = 2000)) # Right skewed

skewed_median_income <- round(40000 + rlnorm(n_locations, meanlog = 10, sdlog = 0.4)) # Log-normal

skewed_competition_count <- rpois(n_locations, lambda = 3) # Poisson (counts, skewed)

skewed_traffic_score <- round(50 + rbeta(n_locations, shape1 = 2, shape2 = 5) * 50) # Beta skewed toward lower values

new_sites <- data.frame(

location_id = 1001:(1001 + n_locations - 1),

latitude = rbeta(n_locations, shape1 = 3, shape2 = 2) * diff(nigeria_lat_range) + nigeria_lat_range[1],

longitude = rbeta(n_locations, shape1 = 2, shape2 = 3) * diff(nigeria_lon_range) + nigeria_lon_range[1],

population_density = skewed_population_density,

median_income = skewed_median_income,

competition_count = pmin(skewed_competition_count, 10), # Capping to match original range

traffic_score = pmin(skewed_traffic_score, 100), # Capping at 100

parking_available = factor(sample(c(TRUE, FALSE), n_locations, replace = TRUE), levels = c(FALSE, TRUE)) # Same factor levels as the training data

)

# Verify the data

print(head(new_sites))

location_id latitude longitude population_density median_income

1 1001 10.313860 6.352274 3998 57100

2 1002 9.484589 8.770463 5212 89130

3 1003 10.070849 9.269369 4561 52912

4 1004 12.528580 4.308185 4793 56897

5 1005 12.008947 4.880606 8479 60865

6 1006 11.902673 9.455585 8024 78579

competition_count traffic_score parking_available

1 4 60 FALSE

2 5 61 FALSE

3 2 60 FALSE

4 6 63 TRUE

5 3 61 TRUE

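6 5 62 FALSE

# Predict success probabilities

# The model was trained on scaled features, so scale the new sites

# with the training data's means and standard deviations first

new_sites_scaled <- new_sites

new_sites_scaled[numeric_vars] <- scale(

new_sites[numeric_vars],

center = colMeans(sample_data[numeric_vars]),

scale = sapply(sample_data[numeric_vars], sd)

)

predictions <- predict(rf_model, new_sites_scaled, type = "prob")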

new_sites$success_probability <- predictions[, "1"]

# Convert `new_sites` to an sf object

new_sites_sf <- st_as_sf(new_sites, coords = c("longitude", "latitude"), crs = 4326)

# Ensure points are within Nigeria's land area

new_sites_sf <- new_sites_sf[st_within(new_sites_sf, nigeria, sparse = FALSE), ]

############ Viz Data #########################

# Define a diverging color palette (RdYlBu for better contrast)

# The domain uses the new sites' probabilities on a 0-100 scale

pal <- colorNumeric(palette = "RdYlBu", domain = new_sites_sf$success_probability * 100, reverse = FALSE)

# Create the Leaflet map with improved color variation

leaflet(new_sites_sf) %>%

addProviderTiles(providers$CartoDB.Positron) %>%

addCircleMarkers(

radius = 4,

color = ~pal(success_probability * 100), # Same 0-100 scale as the palette domain

fillOpacity = 0.7,

popup = ~paste("ID:", location_id,

"<br>Population Density:", round(population_density, 2),

"<br>Success Probability:", paste0(round(success_probability * 100, 1), "%"))

) %>%

addLegend(

pal = pal,

values = new_sites_sf$success_probability * 100, # Fixed multiplication outside of $

title = "Success Probability (%)", # Updated legend title for clarity

opacity = 1

)

Based on the model’s insights, the most promising locations tend to exhibit the following characteristics: high traffic scores (above 85), population density exceeding 6,000 people per square kilometer, and median income levels surpassing $70,000. For instance, Site 1005 demonstrates these characteristics and has a predicted success rate of 67.2%, making it a high-potential location. Conversely, Site 1012, with a lower predicted success rate of 31.5%, may be less promising due to factors like lower traffic and income levels.
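Once every candidate has a predicted probability, turning the scores into a shortlist is a matter of sorting and thresholding. The sketch below uses a small hypothetical `scored_sites` data frame and an assumed cut-off of 0.55; neither the values nor the threshold come from the model above.

```r
# Hypothetical scored sites (illustrative values, not model output)
scored_sites <- data.frame(
  location_id = 1001:1005,
  success_probability = c(0.52, 0.67, 0.31, 0.58, 0.44)
)

threshold <- 0.55  # assumed cut-off; in practice set from business constraints

# Rank sites from most to least promising
ranked <- scored_sites[order(-scored_sites$success_probability), ]

# Keep only the sites at or above the cut-off
shortlist <- ranked[ranked$success_probability >= threshold, ]

print(shortlist)
```

The same two steps apply unchanged to `new_sites` once its `success_probability` column has been filled in.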

Key Insights, Business Implications and Considerations

By using machine learning, we can move beyond gut feelings and base decisions on quantitative evidence. If they held up on real data, these findings would suggest focusing the location search on high-traffic areas in densely populated, affluent regions, even if they come at a premium, since that approach would be more likely to succeed than choosing less expensive locations with weaker market fundamentals. The framework can also be adapted to evaluate many candidate locations at once.

While our model provides valuable insights, there are several ways to enhance this analysis: incorporate additional data sources (e.g., foot traffic patterns, social media activity) and industry-specific indicators, consider temporal factors (seasonal variations, long-term trends), and account for geographical features and zoning regulations.

Practical Applications

Real-world applications of this predictive modeling approach:

- Retail Expansion: Evaluate potential locations for new store openings

- Restaurant Chains: Assess the viability of new restaurant locations

- Service Businesses: Identify promising areas for service-based businesses

- Real Estate Development: Analyze potential development sites

Conclusion

This proof of concept demonstrates the potential of machine learning in transforming business location strategy. While built on synthetic data, the demonstrated approach provides a foundation for developing sophisticated, data-driven decision support tools. The future of location strategy lies in combining such analytical capabilities with deep market understanding and local expertise.

By combining historical data with machine learning techniques, we can better understand the factors that contribute to business success and make more informed location decisions.

Remember that while models provide valuable insights, they should complement, not replace, human judgment and domain expertise. The most effective decisions often come from combining quantitative analysis with qualitative understanding of local market dynamics.

Technical Notes

The demonstration employs R 4.2.0 along with key packages including tidyverse, caret, randomForest, and sf; the maps and the country boundary additionally rely on leaflet and rnaturalearth. This technical stack was chosen to showcase the potential for sophisticated spatial analysis and machine learning in business strategy. The complete code structure provides a template for future development with real-world data.